A Large Language Model Walks Into an Archive...

We’re now two years into the Generative AI Era in higher education. For those of us in the humanities, much of the conversation around Large Language Models (LLMs) has revolved around teaching - the tenor of which is captured in headlines like “The College Essay is Dead” or “Another Disastrous Year of ChatGPT School Is Beginning.” Which, fair enough! LLMs pose all sorts of new pedagogical challenges for writing-based disciplines. But their impact on humanities research has received far less attention.

This is the first in a series of posts I hope to write about using common, off-the-shelf LLMs for historical research. I’m not writing this for folks who know how to, say, use OpenAI’s API to implement a RAG model for a historical corpus (if you know what that means, good for you - but this post isn’t for you). I’m writing for those of you who’ve read plenty of New York Times articles about ChatGPT but haven’t used it all that much yourselves. You’re probably skeptical of the hype around these tools and wonder whether they can actually help you do your work. If this describes you, keep reading.

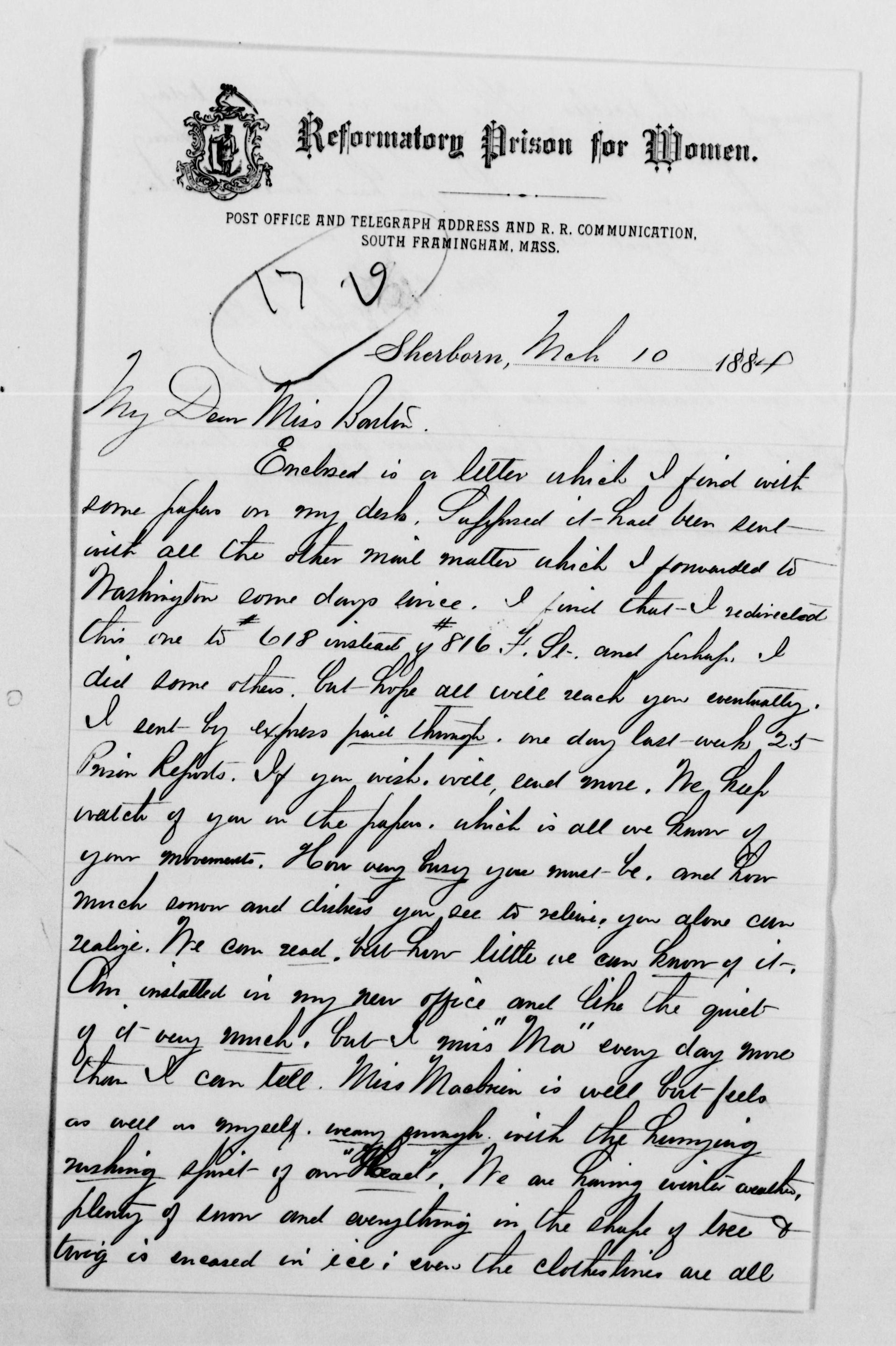

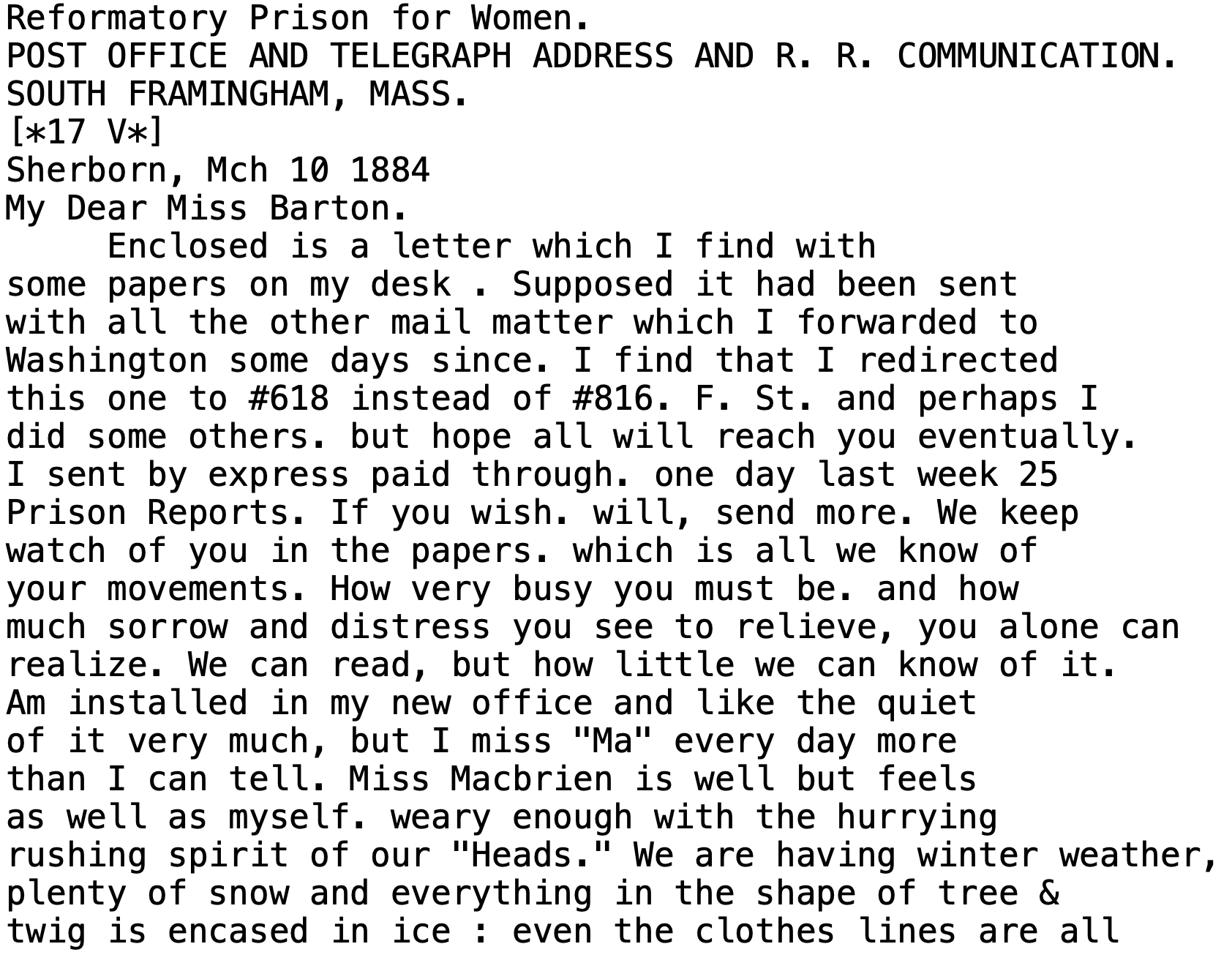

Today’s post focuses on using LLMs to transcribe and interpret primary sources. For most of my career as a digital historian, computational analysis has focused on “machine-readable” sources, or documents that can be easily transformed into data. A typed government report can be automatically processed with Optical Character Recognition (OCR), whereas a handwritten letter has to be transcribed by a human.

The transcription was completed through the Library of Congress's crowdsourced transcription project, Clara Barton: Angel of the Battlefield.

But what counts as “machine-readable” is rapidly changing. To illustrate what I mean, I’ll use a concrete example from my own work. While researching my book, I spent a lot of time mapping large-scale spatial patterns in the 19th-century postal system as it spread across the American West; what was missing from this approach was a more qualitative, intimate look at how individual people and families actually used the US Post.

Enter the Curtis family: four orphans who used the mail to stay connected as they moved across the western United States. In 2015, I spent several days at the Huntington Library typing on my laptop to transcribe their correspondence. My favorite document from this collection was a letter written by Benjamin Curtis to his sister Delia Curtis. In it, Benjamin announced the birth of his daughter on a hot September day in 1886:

Take a peek through any historian’s hard drive and you will probably find thousands of similar photos from archive trips. Transcribing these documents has long been a bottleneck in the research process: a tedious but necessary step between photographing a primary source and actually using it in our work. When I transcribed Benjamin’s letter at the Huntington Library, it wasn’t particularly difficult work, but it was laborious - especially after three days of hunching over the same desk squinting at letter after letter.

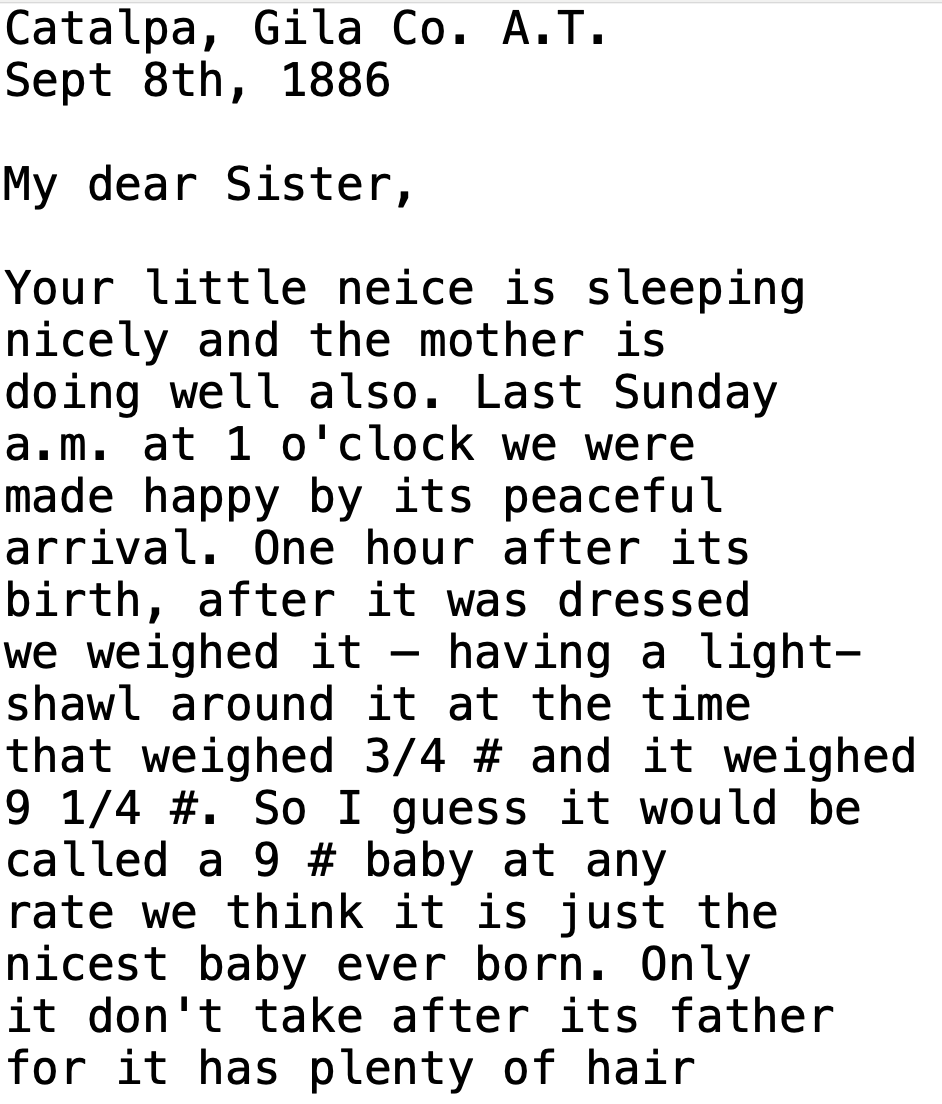

Document transcription is exactly the sort of time-consuming, boring task we would want to hand off to a Large Language Model. But can it actually do the job? To find out, I chose (somewhat at random) a Custom GPT that was designed specifically for transcribing handwritten text. I uploaded my photos of Benjamin’s 1886 letter and, a few seconds later, had a shockingly accurate, well-formatted transcription. Here’s a sample of that transcription:

This is wild! If you had told me just a couple of years ago that a general-use tool could - in a matter of seconds - generate a largely error-free transcription of a handwritten letter from the 19th century, I would have laughed you out of the room! Just as impressively, ChatGPT was able to take its transcription and immediately generate an accurate, concise summary of Benjamin’s letter:

In a letter dated September 8, 1886, from Catalpa, Arizona Territory, Benjamin Wisner Curtis joyfully announces the birth of his daughter, Selia Etta, to his sister, L. Delia Augusta Curtis. He describes the baby’s healthy condition, with dark hair, and notes that both mother and child are doing well despite the hot weather. The letter highlights the involvement of multiple generations in the family’s care and the importance of familial bonds during this significant event.

This example points to the potential of using LLMs for historical research. Their growing ability to process both natural language (text) and “multimodal” sources (non-textual sources like photographs, video, or audio) makes them a powerful tool for working with archival material. And unlike other kinds of digital tools, ChatGPT doesn’t require a user to learn any sophisticated technical skills.

Now for the caveats. Benjamin’s letter is in some ways an ideal source for this kind of analysis. I’ve tried using ChatGPT to transcribe more challenging documents (e.g., a 17th-century handwritten letter in Spanish) and the transcription quality degrades dramatically, sometimes to the point of gibberish. This plug-and-play approach won’t work for every type of source. It’s also not yet scalable. Don’t expect to be able to dump hundreds of documents into ChatGPT and receive flawless, neatly formatted transcriptions.

Moreover, the tool will make mistakes, even with an “ideal” source like Benjamin Curtis’s letter. To take one example: towards the end of his letter, Benjamin revealed that he and his wife Mary had decided to name their newborn daughter “Delia Etta”. ChatGPT, however, transcribed this as “Selia Etta”. It’s a minor and understandable error - a handwritten “D” looks an awful lot like a handwritten “S”. But this mistake had implications for ChatGPT’s ability to interpret the source.

Benjamin named his daughter Delia Etta in honor of his two older sisters, Delia and Henrietta (whom Benjamin affectionately called Etta). The choice had particular resonance for the Curtis siblings, who were orphaned as young children. Naming Benjamin’s daughter after his two sisters was a way to strengthen the family bonds that had been so badly frayed by the loss of their parents. Of course, Benjamin never said any of this explicitly in his letter; it would have been obvious to his sister. It was obvious to me, too. After all, I’d spent many hours at the Huntington Library getting to know the Curtis family across dozens of letters just like this one. But without that context, a tiny transcription mistake - switching an “S” for a “D” - meant that ChatGPT missed an important part of this letter and its larger significance.

The Curtis letter demonstrates why using LLMs for historical research requires careful attention and disciplinary expertise. These tools are potentially quite powerful for aiding historians with some of the mechanics of primary source analysis, but they work best when we actively guide and verify their output. Which is why I would encourage you to take the plunge and start experimenting with these tools for your own research.

If you’re just getting started, here is some general advice:

- Think of an LLM as a research assistant. An extremely knowledgeable, well-read, and over-caffeinated research assistant. They can accomplish quite remarkable things but they will also make mistakes - like mixing up “Selia” and “Delia”. Unlike most research assistants, LLMs will occasionally hallucinate (i.e., make up) things that sound quite convincing. Don’t take their output at face value and always check their work.

- Start small and be specific. Begin with shorter, discrete sources. These are easier for the model to work with and easier for you to evaluate. Just as you would with a research assistant, provide detailed guidance to the LLM about what, exactly, you want it to do. Generally speaking, it helps to assign it a role (e.g., “You are a historical research assistant…”) before carefully spelling out the task that you want it to do.

  I tend to think prompt engineering is an overrated skill. But starting with something like the CREATE framework (or any of the seemingly hundreds of similar acronyms out there) can help you get the hang of writing effective prompts.

- Choose the right tool. This is tricky, given how quickly things change in Generative AI. As of October 2024, if I had to choose one off-the-shelf model for working with primary sources I would probably go with the paid version of GPT-4o (which requires a $20/month subscription). This lets you analyze “multimodal” sources (like photographs) along with using Custom GPTs tailored for specific tasks (like handwriting transcription).

  Ethan Mollick wrote a nice breakdown of the major "frontier" models as of September 2024.

- Keep the human in the loop. LLMs work best when you actively work alongside them as a form of “co-intelligence”. Remember: you are the expert. Iterate back and forth. If the model misses something, point out what it got wrong and ask it to revise. I’ve found that this process of iteration (the “chat” part of ChatGPT) isn’t just about fixing mistakes; it’s actually a useful way for me to refine my own thoughts and interpretations.

What does it mean for historians to “keep the human in the loop”? It underscores the fact that when we read a primary source we aren’t just trying to accurately decode individual words on a page. We’re looking for subtle details and connections that aren’t always obvious. We’re listening for the silences of who’s left out and what’s left unsaid. We’re recognizing what is meaningful or surprising. We’re situating a source within the broader context of its time. All of this requires a certain degree of specialized expertise and experience that, for all of their massive training sets and sophisticated language capacities, Large Language Models can’t fully grasp on their own. At least, not yet.