The Perpetual Sunrise of Methodology

[The following is the text of a talk I prepared for a panel discussion about authoring digital scholarship for history with Adeline Koh, Lauren Tilton, Yoni Appelbaum, and Ed Ayers at the 2015 American Historical Association Conference.]

I’d like to start with a blog post that was written almost seven years ago now, titled “Sunset for Ideology, Sunrise for Methodology?” In it, Tom Scheinfeldt argued that the rise of digital history represented a disciplinary shift away from big ideas about ideology or theory and towards a focus on “forging new tools, methods, materials, techniques, and modes or work.” Tom’s post was a big reason why I applied to graduate school. I found this methodological turn thrilling - the idea that tools like GIS, text mining, and network analysis could revolutionize how we study history. Seven years later the digital turn has, in fact, revolutionized how we study history. Public history has unequivocally led the charge, using innovative approaches to archiving, exhibiting, and presenting the past in order to engage a wider public. Other historians have built powerful digital tools, explored alternative publication models, and generated online resources to use in the classroom.

But there is one area in which digital history has lagged behind: academic scholarship. To be clear: I’m intentionally using “academic scholarship” in its traditional, hidebound sense of marshaling evidence to make original, explicit arguments. This is an artificial distinction in obvious ways. One of digital history's major contributions has, in fact, been to expand the disciplinary definition of scholarship to include things like databases, tools, and archival projects. The scholarship tent has gotten bigger, and that’s a good thing. Nevertheless there is still an important place inside that tent for using digital methods specifically to advance scholarly claims and arguments about the past.

In terms of argument-driven scholarship, digital history has over-promised and under-delivered. It’s not that historians aren’t using digital tools to make new arguments about the past. It’s that there is a fundamental imbalance between the proliferation of digital history workshops, courses, grants, institutes, centers, and labs over the past decade, and the impact this has had in terms of generating scholarly claims and interpretations. The digital wave has crashed headlong into many corners of the discipline. Argument-driven scholarship has largely not been one of them.

There are many reasons for this imbalance, including the desire to reach a wider audience beyond the academy, the investment in collection and curation needed for electronic sources, or the open-ended nature of big digital projects. All of these are laudable. But there is another, more problematic, reason for the comparative inattention to scholarly arguments: digital historians have a love affair with methodology. We are infatuated with the power of digital tools and techniques to do things that humans cannot, such as dynamically mapping thousands of geo-historical data points. The argumentative payoffs of these methodologies are always just over the horizon, floating in the tantalizing ether of potential and possibility. At times we exhibit more interest in developing new methods than in applying them, and in touting the promise of digital history scholarship rather than its results.

What I’m going to do in the remaining time is use two examples from my own work to try to concretize this imbalance between methods and results. The first example is a blog post I wrote in 2010. At the time I was analyzing the diary of an eighteenth-century Maine midwife named Martha Ballard, made famous by Laurel Ulrich’s prize-winning A Midwife’s Tale. The blog post described how I used a process called topic modeling to analyze about 10,000 diary entries written by Martha Ballard between 1785 and 1812. To grossly oversimplify, topic modeling is a technique that automatically generates groups of words more likely to appear with each other in the same documents (in this case, diary entries). So, for instance, the technique grouped the following words together:

gardin sett worked clear beens corn warm planted matters cucumbers gatherd potatoes plants ou sowd door squash wed seeds

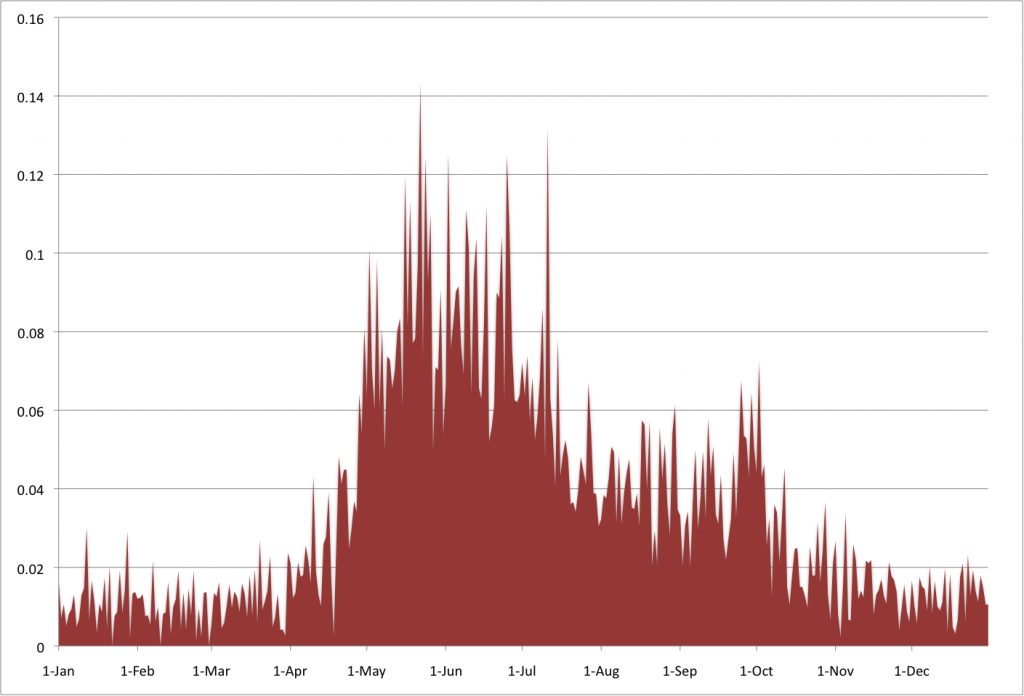

As a human reader it’s pretty clear that these are words about gardening. Once I generated this topic, I could track it across all 10,000 entries. When I mashed twenty-seven years together, it produced this beautiful thumbprint of a New England growing season.
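To make the tracking step concrete, here is a minimal Python sketch. It is not the code behind the original post and it is not topic modeling itself: it assumes the topic's word list is already in hand and simply measures, month by month, what share of the diary's words belong to that list. The function name `topic_share_by_month`, the constant `GARDEN_WORDS`, and the sample entries are all invented for illustration; the sample entries are not quotations from the diary.

```python
from collections import defaultdict

# A subset of the topic words quoted above, with the diary's spellings kept.
# Treating the topic as a fixed word set is a simplifying assumption.
GARDEN_WORDS = {
    "gardin", "sett", "beens", "corn", "planted", "cucumbers",
    "potatoes", "sowd", "squash", "seeds",
}


def topic_share_by_month(entries, topic_words):
    """Return {month: share of that month's words that are topic words}.

    `entries` is an iterable of (month_number, entry_text) pairs.
    Entries from every year are pooled by calendar month.
    """
    topic_counts = defaultdict(int)
    total_counts = defaultdict(int)
    for month, text in entries:
        words = text.lower().split()
        total_counts[month] += len(words)
        topic_counts[month] += sum(1 for word in words if word in topic_words)
    return {
        month: topic_counts[month] / total_counts[month]
        for month in total_counts
        if total_counts[month] > 0
    }


# Invented sample entries, for illustration only.
sample_entries = [
    (1, "snowd all day i have been at home"),
    (5, "i planted corn and sowd seeds in the gardin"),
    (5, "clear i sett cucumbers and squash"),
]

shares = topic_share_by_month(sample_entries, GARDEN_WORDS)
for month in sorted(shares):
    print(month, round(shares[month], 3))
```

Pooling every year's entries by calendar month is what collapses twenty-seven years into a single seasonal curve. A real topic model assigns words to topics probabilistically rather than by a fixed list, so this should be read only as a rough stand-in for the idea.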

Interest in topic modeling took off right around the time that I wrote this post, and pretty soon it started getting referenced again and again in digital humanities circles. Four and a half years later, it has been viewed more than ten thousand times and been assigned on the syllabi of at least twenty different courses. It’s gotten cited in books, journal articles, conference presentations, grant applications, government reports, white papers, and, of course, other blogs. It is, without a doubt, the single most widely read piece of historical writing I have ever produced. But guess what? Outside of the method, there isn’t anything new or revelatory in it. The post doesn’t make an original argument and it doesn’t further our understanding of women’s history, colonial New England, or the history of medicine. It largely shows us things we already know about the past - like the fact that people in Maine didn’t plant beans in January.

People seized on this blog post not because of its historical contributions, but because of its methodological contributions. It was like a magic trick, showing how topic modeling could ingest ten thousand diary entries and, in a matter of seconds, tell you what the major themes were in those entries and track them over time, all without knowing the meaning of a single word. The post made people excited for what topic modeling could do, not necessarily what it did do; the methodology's potential, not its results.

About four years after I published my blog post on Martha Ballard, I published a very different piece of writing. This was an article that appeared in last June’s issue of the Journal of American History, the first digital history research article published by the journal. In many ways it was a traditional research article, one that followed the journal’s standard peer review process and advanced an original argument about American history. But the key distinction was that I made my argument using computational techniques.

The starting premise for my argument was that the late nineteenth-century United States has typically been portrayed as a period of integration and incorporation. Think of the growth of railroad and telegraph networks, or the rise of massive corporations like Standard Oil. In nineteenth-century parlance: “the annihilation of time and space.” This existing interpretation of the period hinges on geography - the idea that the scales of locality and region were getting subsumed under the scales of nation and system. I was interested in how these integrative forces actually played out in the way people may have envisioned the geography of the nation.

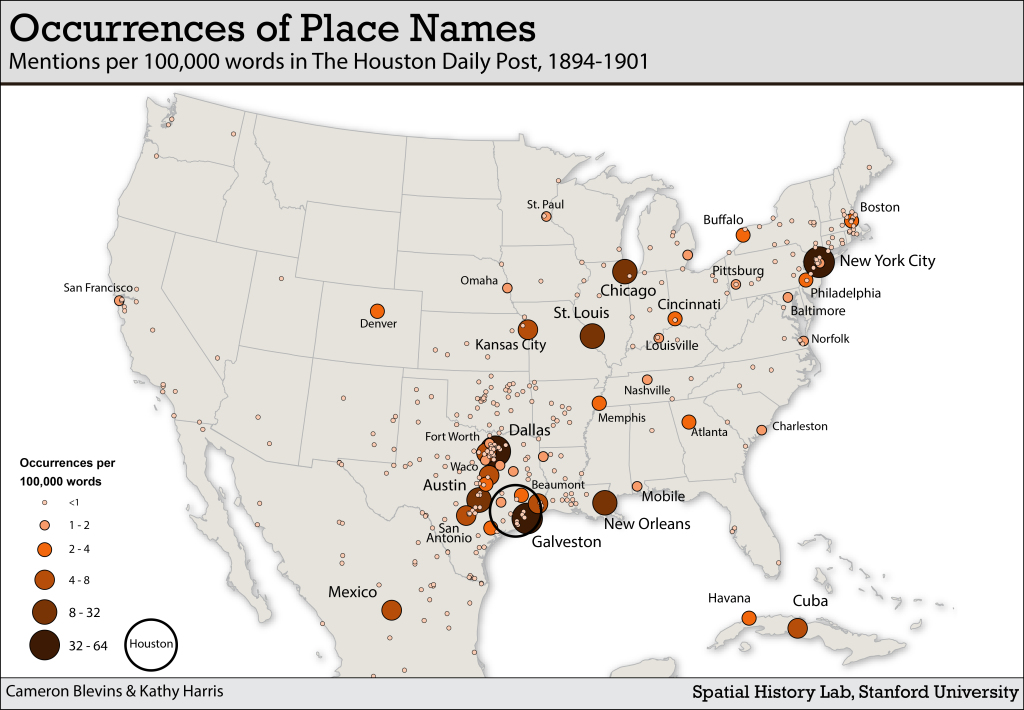

So I looked at a newspaper printed in Houston, Texas, during the 1890s and wrote a computer script that counted the number of times the paper mentioned different cities or states. In effect, I was measuring how one newspaper crafted an imagined geography of the nation. What I found was that instead of creating the standardized, nationalized view of the world we might expect, the newspaper produced space in ways that centered on the scale of region far more than nation. It remained overwhelmingly focused on the immediate sphere of Texas, and even more surprisingly, on the American Midwest. Places like Kansas City, Chicago, and St. Louis were far more prevalent than I was expecting, and from this newspaper's perspective Houston was more of a midwestern city than a southern one.
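The counting step can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the script used for the article: the place list `PLACE_NAMES`, the function `count_place_mentions`, and the sample text are all hypothetical, and the sketch ignores real complications such as OCR errors, ambiguous names, and overlapping city and state names.

```python
import re
from collections import Counter

# Hypothetical gazetteer; a real project would need a far longer list.
PLACE_NAMES = [
    "Houston", "Galveston", "Kansas City", "Chicago", "St. Louis", "New York",
]


def count_place_mentions(text, place_names):
    """Count case-insensitive, whole-word mentions of each place name."""
    counts = Counter()
    for name in place_names:
        # re.escape keeps the period in "St. Louis" from acting as a wildcard.
        pattern = r"\b" + re.escape(name) + r"\b"
        counts[name] = len(re.findall(pattern, text, flags=re.IGNORECASE))
    return counts


# Invented sample text standing in for a page of newspaper content.
sample_page = (
    "Cotton shipped from Houston to St. Louis. "
    "Freight rates: Houston to Kansas City, Houston to Chicago. "
    "Chicago wheat steady."
)

mentions = count_place_mentions(sample_page, PLACE_NAMES)
for place, count in mentions.most_common():
    print(place, count)
```

Note that commodity prices and freight rates count just as much as headline stories here, since the function makes no distinction between kinds of content.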

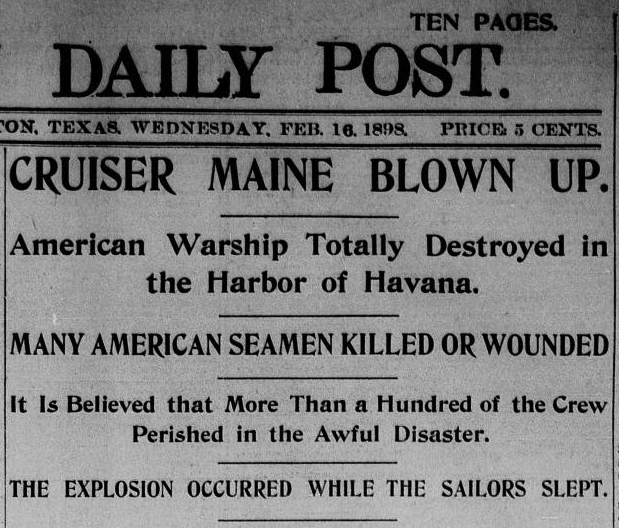

I never would have seen these patterns without a computer. And in trying to account for them I realized that, while historians might enjoy reading stuff like this...

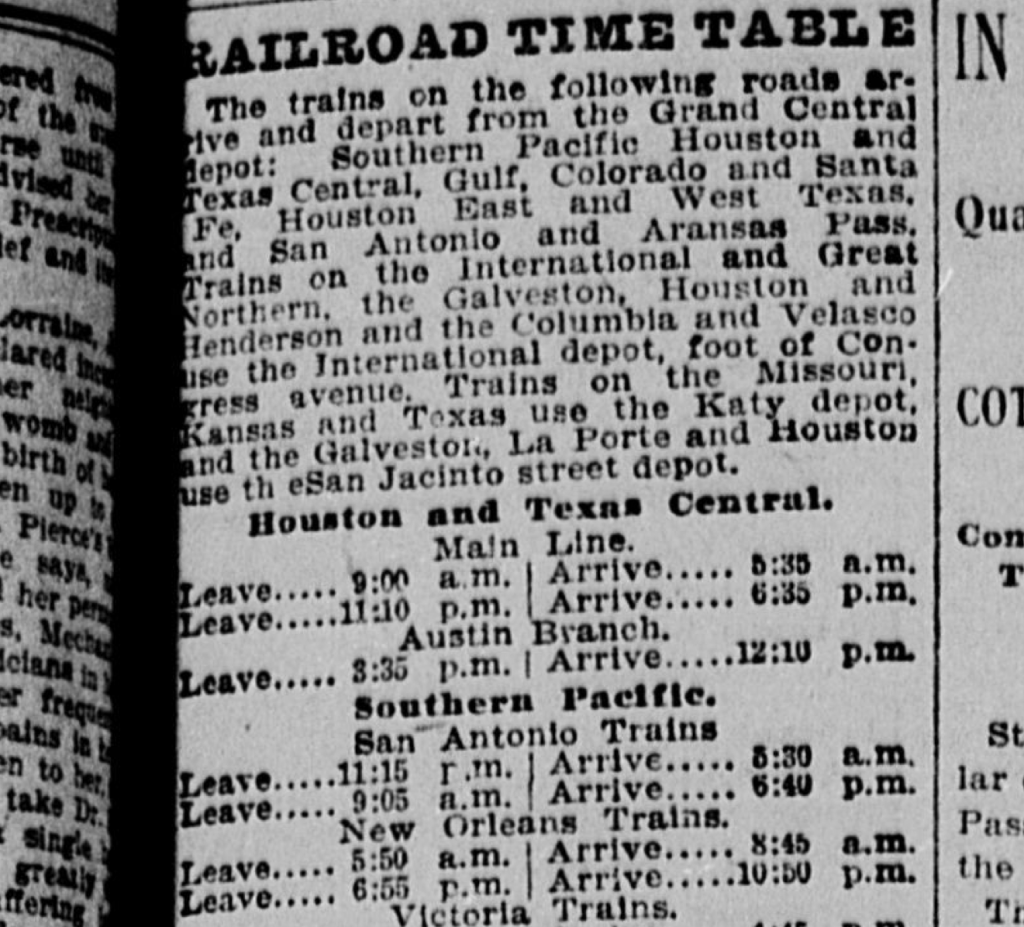

...newspapers often look a lot more like this:

All of this really boring stuff - commodity prices, freight rates, railroad timetables, classified ads - made up a shockingly large percentage of content. Once you include the boring stuff, you get a much different view of the world from Houston in the 1890s. I ended up arguing that it was precisely this fragmentary, mundane, and overlooked content that explained the dominance of regional geography over national geography. I never would have been able to make this argument without a computer.

The article offers a new interpretation about the production of space and the relationship between region and nation. It issues a challenge to a long-standing historical narrative about integration and incorporation in the nineteenth-century United States. By publishing it in the Journal of American History, with all of the limitations of a traditional print journal, I was trying to reach a different audience from the one who read my blog post on topic modeling and Martha Ballard. I wanted to show a broader swath of historians that digital history was more than simply using technology for the sake of technology. Digital tools didn’t just have the potential to advance our understanding of American history - they actually did advance our understanding of American history.

To that end, I published an online component that charted the article's digital approach and presented a series of interactive maps. But in emphasizing the methodology of my project I ended up shifting the focus away from its historical contributions. In the feedback and conversations I’ve had about the article since its publication, the vast majority of attention has focused on the method rather than the result: How did you select place-names? Why didn’t you differentiate between articles and advertisements? Can it be replicated for other sources? These are all important questions, but they skip right past the arguments that I’m making about the production of space in the late nineteenth century. In short: the method, not the result.

I ended my article with a familiar clarion call:

Technology opens potentially transformative avenues for historical discovery, but without a stronger appetite for experimentation those opportunities will go unrealized. The future of the discipline rests in large part on integrating new methods with conventional ones to redefine the limits and possibilities of how we understand the past.

This is the rhetorical style of digital history. While reading through the conference program I was struck by just how many abstracts about digital history used the words “potential,” “promise,” “possibilities,” or in the case of our own panel, “opportunities.” In some ways 2015 doesn’t feel that different from 2008, when Tom Scheinfeldt wrote about the sunrise of methodology and the Journal of American History published a roundtable titled “The Promise of Digital History.” I think this is telling. Academic scholarship’s engagement with digital history seems to operate in a perpetual future tense. I’ve spent a lot of my career talking about what digital methodology can do to advance scholarly arguments. It’s time to start talking in the present tense.